Built a Web Crawler: Because Stalking the Internet is a Skill

I really wanted to know how a web crawler works, so I decided to build a simple one of my own. I also had to submit a final graduation project, and a web crawler seemed like a good fit. In this post I will explain how my crawler works in its simplest form. I am not focused on performance right now because I am still learning how crawlers work, but I would love to hear your suggestions for improving it.

Features:

Below are the features this system design covers. I have implemented most of them, so you can also run my code on your local machine.

Web Crawling

Parallel Web Crawling (Distributed)

Document Parsing

Breadth First Search

Microservice Architecture:

I built this project using a microservice architecture so that each service handles a single responsibility and can be scaled independently later. Let's discuss the most important services in this project.

1. Crawl Manager

The Crawl Manager acts as the master service that tells the crawler services which pages to crawl. It has the following responsibilities:

API Endpoint: It exposes an API endpoint that a client can call to submit a new crawl request.

URL Frontier: As its name suggests, it acts as a queue for the URLs that need to be crawled.

Robots.txt Scanner: Our crawler needs to obey the rules in a special file called robots.txt, which lives at the root of a domain. This file tells crawlers which pages they are not allowed to crawl. Technically we could crawl those pages anyway, but doing so is unethical.

Message Queue: We send URLs to the crawler services through a message queue such as Kafka, RabbitMQ or Redis Pub/Sub. The crawler services send their results back to the Crawl Manager for further processing, such as storage and indexing.

2. Crawler

It is responsible for fetching the HTML of a webpage and then parsing it into our desired format.

Web Scraper: The web scraper is responsible for fetching the webpage and its content.

Content Parser: We then pass the fetched HTML page to a parser that removes stop words such as 'a', 'I', 'the' and 'and'. These are very common words that carry little weight when ranking a web page. The parser also converts the page into the JSON format we use for storage.

Breadth First Search: We follow breadth-first search. While parsing a webpage, whenever we encounter a URL, we send it to the Crawl Manager, which can then dispatch it to a crawler service again. Because the URL Frontier is a first-in, first-out queue, pages are visited level by level. This lets us crawl many URLs without listing them explicitly, and it is broadly how real-world crawlers discover new pages.

Design Diagram

URL Frontier

It is responsible for telling the crawler service which URLs to crawl. It receives a list of URLs from the client and verifies whether each URL should be sent to the crawler. It performs several checks, such as:

Has that URL already been crawled?

Has that URL been recently crawled?

Is that URL allowed by robots.txt?

Are we sending too many requests to the same server, so that it might flag us as spam?

etc.

Once a URL passes all of these checks, the frontier sends it to the crawler service.

Robots.txt File

It is a special file placed at the root of a website. It tells robots such as our web crawler which policies to obey while crawling that site. Here is a basic yet fully functional example of a robots.txt file:

```text
User-agent: BLEXBot
Disallow: /

User-agent: Twitterbot
Disallow:

User-agent: *
Disallow: /action/
Disallow: /publish
Disallow: /sign-in
Disallow: /channel-frame
Disallow: /visited-surface-frame
Disallow: /feed/private
Disallow: /feed/podcast/*/private/*.rss
Disallow: /subscribe
Disallow: /lovestack/*
Disallow: /p/*/comment/*
Disallow: /inbox/post/*
Disallow: /notes/post/*
Disallow: /embed

User-agent: facebookexternalhit
Allow: /
Allow: /subscribe

SITEMAP: https://beyondthesyntax.substack.com/sitemap.xml
SITEMAP: https://beyondthesyntax.substack.com/news_sitemap.xml
```

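There are two main directives to focus on: User-agent and Disallow. User-agent names the crawler that a group of rules applies to; crawlers identify themselves with the same name in the User-Agent HTTP header they send to servers. Disallow lists the paths that crawler must not fetch. In the file above, BLEXBot is blocked from the entire site, Twitterbot may crawl everything (an empty Disallow disallows nothing), and every other crawler without its own group (User-agent: *) must stay away from paths such as /action/, /publish and /sign-in. So we need to configure our crawler to follow the policies in this file.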

Web Scraper

For this I am using Colly, because I built the project in Go and I don't know Python very well. If you are comfortable scraping pages in Python, though, you may prefer it, since its scraping ecosystem (for example Scrapy and Beautiful Soup) is much larger.

Below is a very basic example of what a scraped HTML page might look like.

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8">
  <meta name="viewport" content="width=device-width, initial-scale=1.0">
  <title>Sample Web Page</title>
</head>
<body>
  <header>
    <h1>Breaking News</h1>
    <nav>
      <ul>
        <li><a href="/home">Home</a></li>
        <li><a href="/about">About</a></li>
        <li><a href="/contact">Contact</a></li>
      </ul>
    </nav>
  </header>
  <main>
    <article>
      <h2>Latest Technology Updates</h2>
      <p>The tech industry is evolving rapidly with new advancements in AI, cloud computing, and cybersecurity.</p>
      <img src="ad-banner.jpg" alt="Advertisement">
    </article>
  </main>
  <aside>
    <h3>Sponsored Content</h3>
    <p>Check out this amazing new product!</p>
  </aside>
  <footer>
    <p>© 2025 Tech News</p>
  </footer>
</body>
</html>
```

Parser

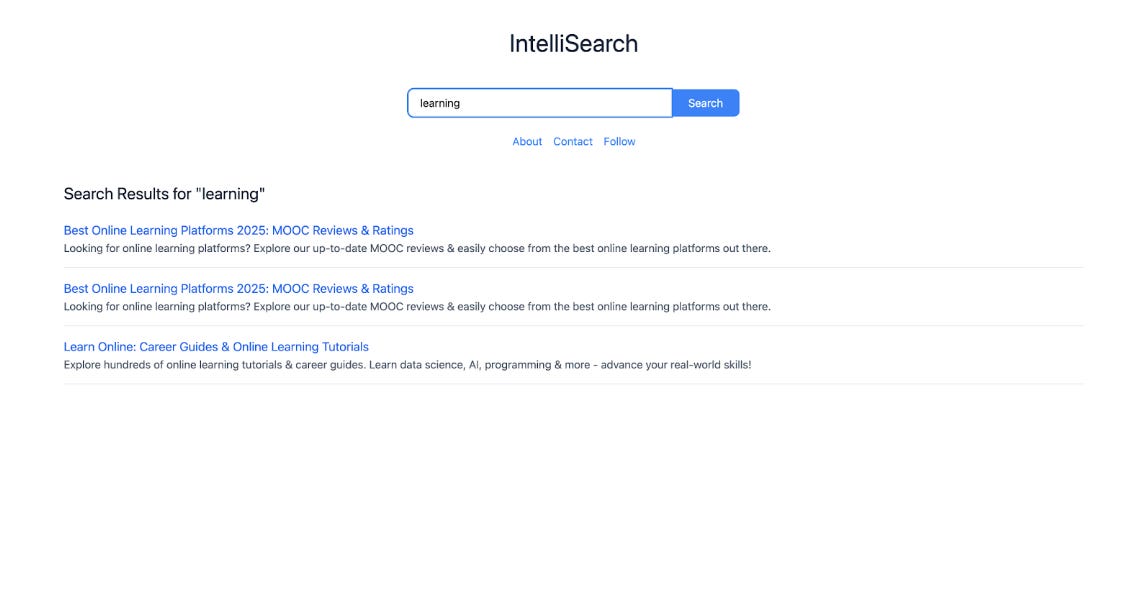

Once we have scraped a webpage, our task is to extract the useful content and ignore the rest. I crawled a page from Bitdegree, and the image above shows the parsed version of that page.

All of this is extracted from the page's HTML. You can easily find a library for traversing HTML in your favourite programming language. You traverse the page node by node, keep the most important content (as shown in the image above), and store it for later processing.

Source Code

Here is a basic but scalable implementation of a web crawler. I built it for fun, so the code is far from perfect and there is room for improvement. It is also missing some components, which I am implementing in my spare time, but it is fully functional.

Challenge

One thing I did not cover in this post is domain-based throttling. I encourage you to design a simple domain-based throttling component on your own. Its job is to stop our crawler from sending excessive requests to the same domain, both to avoid overloading the site and to avoid being flagged as spam.

Bye

I hope you found this post informative. You can subscribe to this newsletter to receive the latest posts directly in your inbox. It is completely free, and I promise we will never spam you. Thank you, and have a nice day.