Generating Unique IDs in Distributed System

Hi, everyone! This week I'm diving deep into databases. This post is all about how we can generate unique IDs in a distributed environment.

Before starting, I would like to say that Beyond They Syntax is now a family of 110 members. You can also become a member by subscribing for free. Thanks to those 110 people for being members.

If we have a database for a single server, then we don't need to bother about unique IDs because the auto-increment primary key will work fine. But in the case of a distributed environment where a database is sharded between multiple services, the same ID can be used in all the database instances. When I say 'ID', it means 'primary key'.

What problem can the same ID cause?

Ambiguity: If an ID is present in multiple shards of a DB, then our application might query the wrong shard and bring us incorrect data. For example, if a row with ID "1" exists in three shards, "A", "B" and "C", and our application is set to query shard "A" by default, then incorrect data may be shown.

Integrity Issues: If we need to move or merge shorts data, conflict makers, which will lead to either overriding one record with another, our system may fail to insert data due to primary key violation.

Solution

Multi-Master Replication

The solution for this problem is just a simple trick. We will use the database auto-increment feature, but instead of incrementing the primary key by 1, we will increment it by k, where k is the number of database servers in use. This way, any two shards will never have the same primary.

For example, in a 3-node setup:

Node 1 creates IDs like:

1, 4, 7, 10...Node 2 creates:

2, 5, 8, 11...Node 3 creates:

3, 6, 9, 12...

But this approach comes with some drawbacks.

It is hard to scale when there are multiple data centers.

This approach will cause issues when a server is added or removed.

UUIDs

The universally unique identifier is a 128 bits number which is used to identify data in computer systems. A total of 5.3 x 10^36 unique UUIDs can be generated. Because of this high number, there is a very low chance of getting collisions. According to a Wikipedia article, if you keep generating 1 billion UUIDs every second for 100 years, there is a slight chance of generating a single duplicate UUID.

Pros:

No coordination required

Easy to generate in any language

Cons:

UUIDs are long and random, which leads to poor index locality in databases like PostgreSQL or MySQL (hurts performance).

Hard to sort by time.

The main drawback of this approach is that using UUID as a primary key will degrade database performance. If you want to read more, you can refer to this awesome article.

I also have a fun fact for you. Check out this website. It contains a list of all the UUIDs that can be generated.

Ticket Server

A ticket server is a central service used in distributed systems to generate unique IDs. Whenever a service needs a new ID, it sends a request to the ticket server, which responds with the next available number. This approach guarantees uniqueness and keeps things simple. However, since all ID requests go through a single point, it can become a bottleneck or a single point of failure. To improve performance, some systems request a batch of IDs at once. If you're interested, there are some really cool open-source implementations you can explore online!

It comes with several pros and cons:

✅ Pros:

Simple and reliable – One source of truth for generating unique IDs.

Globally unique IDs – No risk of ID collisions across services.

Easy to implement – Can be built with just a counter and an API.

Language-agnostic – Any service (Go, Python, Java, etc.) can request IDs through the API.

❌ Cons:

Single point of failure – If the ticket server goes down, no new IDs can be generated.

Scalability bottleneck – All services must talk to the same server, which can become a performance limit under high load.

Added network latency – Each ID request needs a network call.

Twitter Snowflake Approach

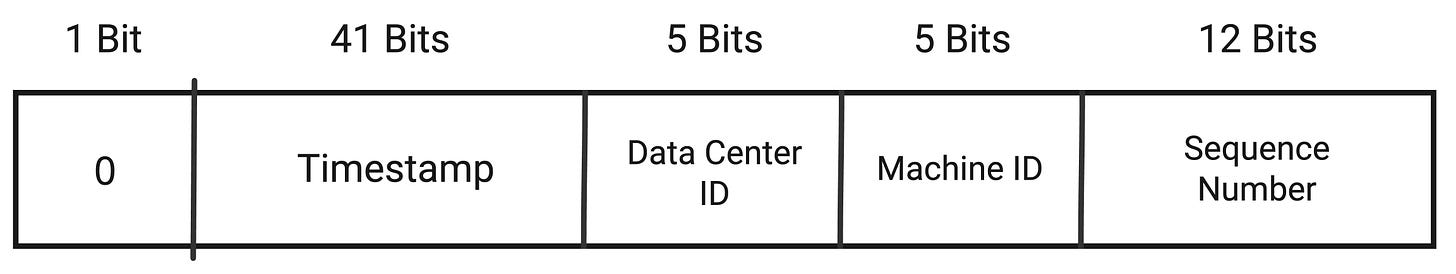

Twitter created their own ID generation system known as "Snowflake". It is the most advanced approach because the IDs generated with this approach are sortable and include time. Moreover, they are 64 bits, which is half of UUIDs (128 bits). Let's see how Twitter generates these IDs with the below diagram.

Zero Bit: This bit is not currently being used, but in the future it may be used to distinguish between signed and unsigned IDs.

Timestamp: The next 41 bits are four epoch timestamps. Snowflake's default epoch is equal to Nov 04, 2010, 01:42:54 UTC. This time is stored in milli-seconds.

Datacenter ID: This bit holds the Data Centre ID from where the Snowflake ID is being generated. It is five bits. So it can hold a maximum of 32 numbers.

Machine ID: Just like Data Centre ID, we also have machine ID. It can also have 32 numbers.

Sequence Number: The next 12 bits are for a sequential auto-increment number. Each time an ID is generated, this number is incremented by one, and it is stored locally on the machine.

12 bits can have 4,096 unique numbers, so theoretically this can generate 4,096 IDs per milli-second and a total of 4,194,304,000 IDs/second.

This approach can be modified in case of more than 32 data centers or 32 machines; we can combine these and create a single 10 bits of space which can hold 1024 machines.

Any Thought?

So these were the techniques I explored. In case I have missed something, you can let me know in the comments. Also, I'll keep posting new things in this newsletter regularly, so you can subscribe to this for free. Thanks, and have a nice day.